Facebook does what it was built for

"Dumb fucks" is what Mark Zuckerberg called the first few thousand "friends" who lavishly shared their personal lives on Facebook back in 2004. Watching grey-haired members of Congress ask mostly uninformed questions during Zuckerberg's hearing before the US Senate, and seeing his relieved smile, one might suspect he thought the same of the seasoned politicians in front of him.

To me, the hearing was the most blatant and direct example yet of the striking gap between the seemingly unlimited possibilities of technological evolution and the public's glaring lack of understanding of them.

Here are five reasons why this situation should not come as a surprise.

- Internet = Surveillance

The world-renowned Internet security expert Bruce Schneier once said that "surveillance is the business model of the Internet." Just as a fresh-water pipe needs ongoing monitoring for obvious health reasons and a sewage pipe needs regular maintenance to work properly, the pipes carrying Internet traffic are subject to sophisticated monitoring. The Internet is built on the TCP/IP protocol suite, which serves as the global standard for information exchange. These protocols were developed with funding from DARPA, the US military research agency, in the late 1970s, and in March 1982 the US Department of Defense declared them the standard for all military computer networking. Monitoring traffic, from emails to images, from video clips to attached PDFs and from voice messages to animated GIFs, has therefore been an intrinsic part of the Internet from day one, and for good reason. Managing an exponentially growing volume of traffic is no easy task, and keeping out viruses and child pornography and protecting our online banking transactions are further good reasons. Keeping control over an entire population to stabilize the political system in power is the most fundamental reason of all. Deep packet inspection (DPI) is one of several standard techniques for scanning and analyzing information that travels through the Internet, and it does exactly what its name suggests: it allows government organizations to open and inspect in detail any packet of information traveling from A to B. While the Internet is by far the most fantastic medium humanity has ever come up with, it was never meant to be a private space.

- Ten-year gap

I once attended a conference on risk management and international regulation where a leading researcher explained why top athletes rarely get caught in doping tests: the laboratories that develop new performance-enhancing drugs are at least ten years ahead of the labs that test the athletes. We can reasonably assume that a similar gap separates the information technology developed by US military organizations from the common knowledge of the world's population. Ten years from now, most of us will start to understand what is technically possible today, and therefore what is already being done with the data collected about us.

- Facebook does what it was built for

Once US intelligence services understood Facebook's potential as a gargantuan supplier of valuable information, the CIA, through its venture capital firm In-Q-Tel, is often said to have developed early ties to the company. Facebook's unprecedented rise to become the world's biggest social network, supplier of private information and enabler of behavioral economics analysis is therefore perfectly in line with the hegemony of the United States of America. A thorough understanding among politicians and the public of what sophisticated data analytics can do would be counterproductive.

- We all agree

This is where the magic lies. Imagine a conversation between two Gestapo agents in 1943, both of whom work day and night to spy on suspected individuals and meticulously collect information about them. If one told the other that, 70 years from now, billions of people would tell agents like them everything they do, send them photos without being asked and report whom they hang out with and where, his colleague would burst out laughing. Yet this is exactly what happened. Part of Facebook's ever more complex terms of use read: "By posting member content to any part of the Web site, you automatically grant, and you represent and warrant that you have the right to grant, to Facebook an irrevocable, perpetual, non-exclusive, transferable, fully paid, worldwide license to use, copy, perform, display, reformat, translate, excerpt and distribute such information and content and to prepare derivative works of, or incorporate into other works, such information and content, and to grant and authorise sublicenses of the foregoing." And its privacy policy states that "Facebook may also collect information about you from other sources, such as newspapers, blogs, instant messaging services, and other users of the Facebook service through the operation of the service (e.g. photo tags) in order to provide you with more useful information and a more personalized experience. By using Facebook, you are consenting to have your personal data transferred to and processed in the United States." In the name of "free" entertainment and connecting with our friends, we politely and voluntarily do the tedious job formerly done by thousands of intelligence agents. If we agree to use a product we don't pay for, we accept that we are the product.

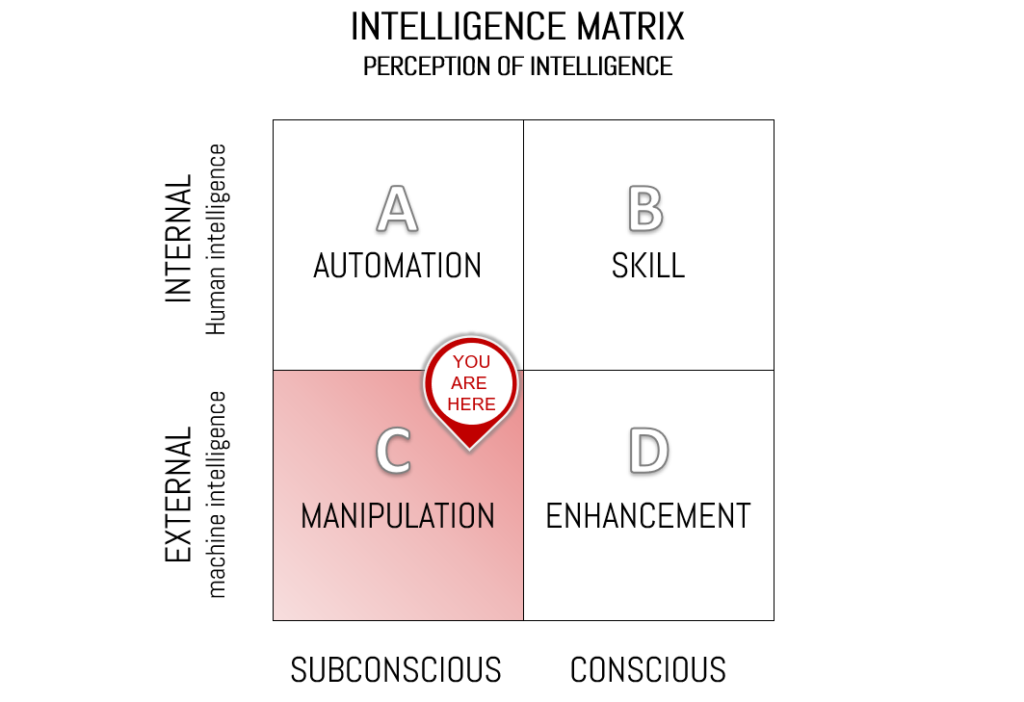

- Out of sight, out of mind

The digital transformation of our lives brings many advantages, but it also comes with pitfalls. The invisibility and intangibility of data is probably the creepiest of them all. We are inherently visual animals, relying disproportionately on our eyes to construct our own reality. As soon as we can't see, we get scared (walking through a forest by day and by night are two entirely different experiences). And because we don't see data and don't understand what self-learning algorithms can predict from it, we should indeed be scared. As soon as political propaganda becomes visible, it loses its effectiveness. By the same logic, we should do whatever it takes to keep an open dialogue on the subject and to force the world's data-driven media behemoths to tell us how our data is being used.